by Chris Pehura — C-SUITE DATA — 2024/03/07

Email us at contactus@csuitedata.com to see how we make your plans work.

AI is here, and AI is here to stay. AI has many failures, but they are not the fault of AI. They are the fault of our expectations of AI. When we hear “Artificial Intelligence,” we think of intelligence. We think of something with a face: a cute little girl, or a rundown cyborg that wants to destroy us all. Because we give it a face, we form an emotional connection, an emotional relationship, with it. That’s why we trust it, despise it, or hate it, and it’s why our expectations for AI are unrealistic. The “intelligence” behind AI is just a bunch of things interconnected with another bunch of things, all meshed together. That’s all it is. There is no intelligence in AI and nothing human about it, so we should stop treating it as the equivalent of a human or as having human intelligence. We have to realign our expectations, manage them better, and use the tool in a much better way.

Google lost $90 billion in market value because its Artificial Intelligence, Gemini, produced some highly controversial output: images of black Nazis and statements about pedophilia. People didn’t take kindly to that, but it shouldn’t have been unexpected. AI is just a tool, and that $90 billion figure is a good indication of how unrealistic our expectations are for using AI. Just because we call it Artificial Intelligence doesn’t mean it’s intelligent. Let’s get into the main failures of Artificial Intelligence.

5 — Mixed up Differences with Similarities

AI likes to say that different things are the same and that the same things are different. It does this because it breaks a concept down into pieces, and the similarities and differences between concepts are lost in the breakdown. AI doesn’t even know they exist. So AI jumps the gun: it concludes that some things are similar and others are different, or it mixes things together and treats them as equivalent. For instance, if you ask AI to talk about ethics and morals, you will find that it uses the two terms interchangeably in its explanation. Likewise, if you use AI for project management training versus leadership training, you will come away with a strong impression that project management and leadership are equivalent in many areas, which is not the case if you know how to do one versus the other. AI likes to mix things up. When you review AI output, make sure its comparisons, equivalencies, and distinctions are done the right way. If you don’t, you’re going to come to the wrong conclusion.

4 — Popularity is not Correctness

A major failure of AI is that AI knows what’s popular. It doesn’t know what’s correct, and it certainly doesn’t know what’s right. When AI is trained, it goes through large amounts of data looking for patterns. If one piece of data shows up more often than another, the AI treats it as more important and more correct, and that’s often not the case. Data sets are noisy. They have gaps. They contain bad data, and often the bad data overshadows the good data. So the AI makes some ridiculous assumptions. For instance, if keywords like DEI and Nazi keep appearing together in a data set of news articles, the AI might learn a strong connection between the two: that DEI and Nazis are related. And the result? We get black Nazis, which have nothing to do with Nazis or Nazi Germany. The AI only knows what it has extrapolated from the data. It doesn’t know the source or the historical context of that data. It doesn’t have common sense. So when you look at what Artificial Intelligence produces, keep in mind that popular is not the same as correct; a popular answer can be outright wrong. Make sure the AI gives you balanced strategies, balanced steps, and balanced judgments.
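To make the popularity problem concrete, here is a deliberately tiny toy in Python. It is not how any real model is trained; it assumes a hypothetical picker, `most_popular_answer`, that simply returns whichever answer appears most often in its data, which is the failure described above in its simplest form.

```python
from collections import Counter


def most_popular_answer(observations: list[str]) -> str:
    """Toy 'model' (hypothetical): return the answer seen most often in the data.

    Frequency is the only signal; nothing here checks whether an answer is true.
    """
    counts = Counter(observations)
    answer, _count = counts.most_common(1)[0]
    return answer


# Hypothetical noisy data set: a wrong answer is repeated more often than the right one.
noisy_data = ["2 + 2 = 5", "2 + 2 = 5", "2 + 2 = 5", "2 + 2 = 4"]

print(most_popular_answer(noisy_data))  # prints: 2 + 2 = 5
```

Add enough copies of the correct line and the output flips, which is the point: the answer tracks what the data repeats, not what is correct.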

3 — Reassembled Wrong

AI fails at the secret sauce: it assembles things wrong. When AI gives you a solution, the pieces will be the correct pieces, but they’ll have the wrong priority or be interconnected the wrong way, and the overall solution will be assembled in the wrong order. This is typical, and you can see it whenever you ask AI to generate a piece of code, a strategy, a plan, or a course. AI doesn’t know the secret sauce for a solution because it doesn’t have subject matter expertise. We do. So we should expect AI to give us the pieces we need; then we reassemble them and improve on what it produced. That is where our value is, and that’s how we should be using AI.

2 — Order specifies Priority and Context

When you interact with AI, you have to order your commands, words, instructions, and code in a specific way. AI assumes that what you give first is more important than what you give second. If I type “project training” into a chat AI, it treats “project” as more important than “training” and gives me a specific result. If I type “training project,” I get a different result, often a very different one. The same goes for “management project training” versus “project management training.” Order becomes extremely important. The AI doesn’t have the common sense to know which words are important or how to process them; it is guessing. So when we use AI, we have to be careful to put the right words, the more important words, first in our prompts, our interactions, and our code.
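One way to see why order matters: “project management training” and “management project training” contain exactly the same words, so order is the only thing that distinguishes them. The toy check below uses hypothetical helper names (`same_words`, `same_sequence`); it does not model how any particular chat AI weights words, it only shows that a system reading prompts as ordered sequences receives two different inputs.

```python
def same_words(a: str, b: str) -> bool:
    """True if both prompts use the same words, ignoring order."""
    return sorted(a.split()) == sorted(b.split())


def same_sequence(a: str, b: str) -> bool:
    """True only if both prompts use the same words in the same order."""
    return a.split() == b.split()


first = "project management training"
second = "management project training"

print(same_words(first, second))     # True: identical vocabulary
print(same_sequence(first, second))  # False: different order, different input
```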

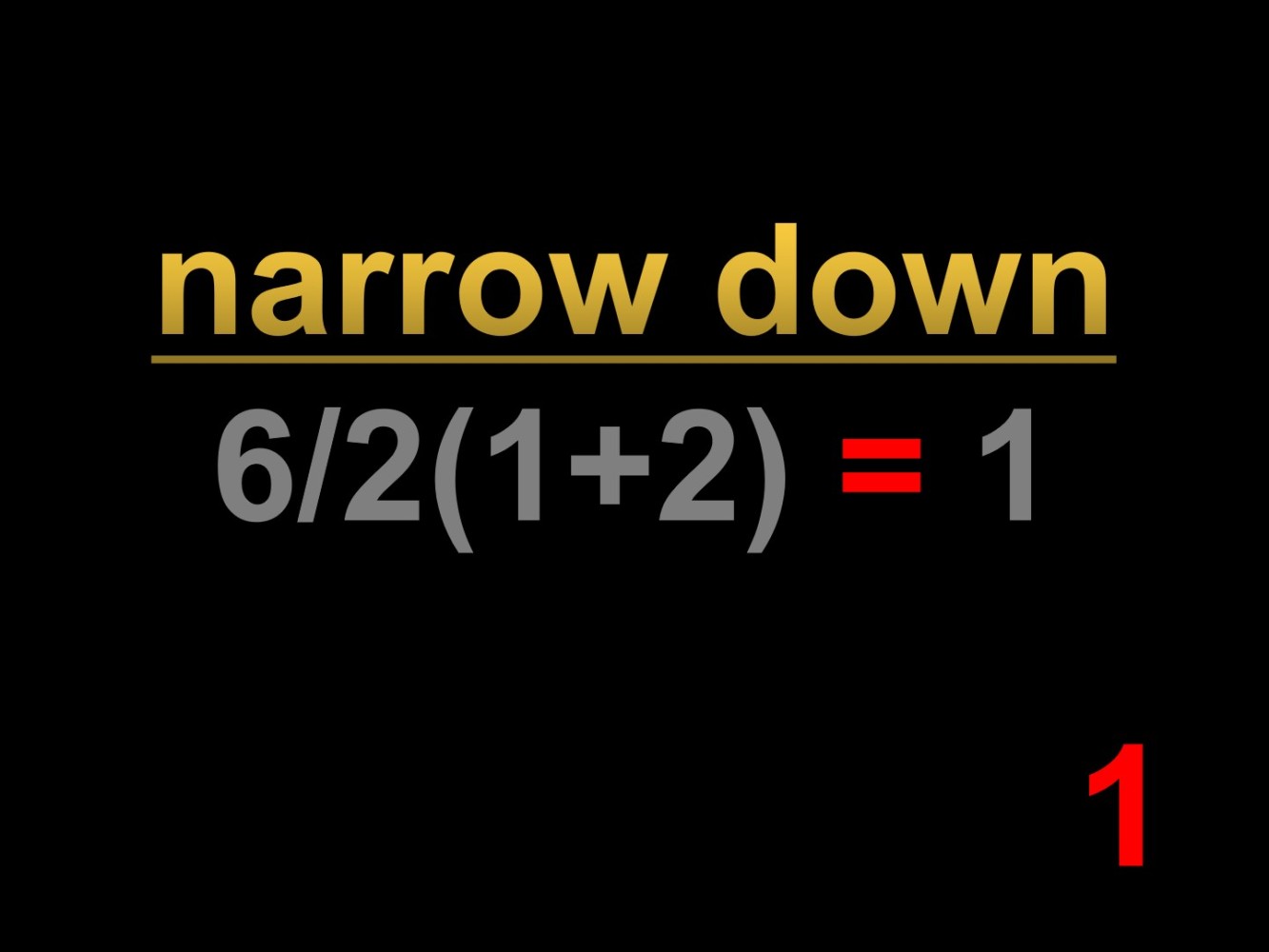

1 — No Conversational Analysis

The biggest failure of AI is that it likes to narrow things down to identify a solution. When we put together a solution, we don’t do that. We have a conversation where we go from general to specific and back to general again, oscillating back and forth. AI doesn’t do that. It optimizes by narrowing down, so it can only go in one direction; it can’t oscillate. This can get us into trouble when we forget to reset the chat or its history so the AI starts again from the general and works toward the specific. We can end up with the AI narrowing down to a very bad solution. Here’s an example:

6/2(1 + 2)

Read with the standard convention that division and multiplication have equal precedence and are evaluated left to right, the answer is nine, not one. However, by giving the chat AI enough history with specific prompts, I was able to get it to come up with an answer of one. And that’s wrong.
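The two readings of the expression can be checked directly. Python, like most programming languages, gives / and * equal precedence and evaluates them left to right; the second function (a hypothetical name, for illustration) shows the alternative reading, where the implied multiplication 2(1 + 2) is grouped first, which is the reading that produces one.

```python
def left_to_right() -> float:
    # / and * share precedence and associate left to right,
    # so this parses as (6 / 2) * (1 + 2) = 3 * 3.
    return 6 / 2 * (1 + 2)


def implied_multiplication_first() -> float:
    # Alternative reading that binds 2(1 + 2) first: 6 / (2 * 3).
    return 6 / (2 * (1 + 2))


print(left_to_right())                 # 9.0
print(implied_multiplication_first())  # 1.0
```

Writing the intended grouping with explicit parentheses removes the ambiguity either way.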

It’s not important to like AI, and it’s not important to distrust AI. What’s important is that you treat AI as the tool it is. The intelligence part of Artificial Intelligence is just a bunch of things interconnected with another bunch of things. It is a model, an extrapolation of data. That’s all it is. AI only knows what’s been extrapolated. It doesn’t know the original data or its historical context. That’s why we get black Nazis and black popes, and that’s why we get these weird results involving ethics and morality. AI just doesn’t have the common sense or the intelligence. AI doesn’t know the secret sauce; we do. That’s where our subject matter expertise comes into play, and we should expect to use it.

AI is good for a few things. It gives us all the pieces, and we reassemble them the right way. But we should not expect more than that from AI, at least not yet.
